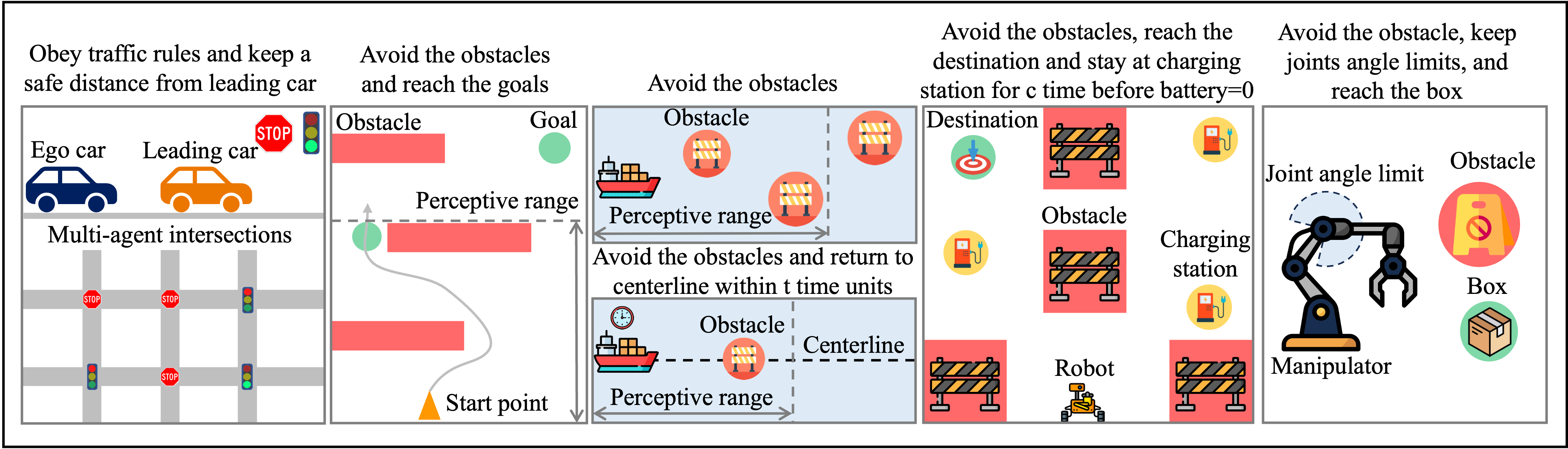

Ensuring safety and meeting temporal specifications are critical challenges for long-term robotic tasks. Signal temporal logic (STL) has been widely used to specify these requirements systematically and rigorously. However, traditional methods for finding a control policy under STL constraints are time-consuming and do not scale to high-dimensional, complex systems. Reinforcement learning (RL) methods use hand-crafted or STL-inspired rewards to guide policy learning, which can lead to unexpected behaviors because of ambiguity and sparsity in the reward. In this paper, we propose Signal Temporal Logic Neural Predictive Control, which directly learns a neural network controller to satisfy the constraints specified in STL. During training, our controller learns to roll out trajectories that maximize the STL robustness score. At deployment, similar to Model Predictive Control (MPC), the learned controller predicts a trajectory within the planning horizon that satisfies the STL requirement. A backup policy is triggered to ensure safety when the learned controller fails to generate STL-satisfying trajectories. Our approach can adapt to various initial conditions and environmental parameters. We conduct experiments on five STL tasks. Our approach outperforms classical methods (MPC and STL solvers) and RL methods in STL accuracy, especially on tasks with complicated STL constraints, with a runtime 10X-100X faster than the classical methods.
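To make the robustness score and the backup fallback concrete, the following is a minimal sketch and not the implementation used in this work. It assumes the standard quantitative STL semantics (min for "always" and conjunction, max for "eventually"), a scalar state, and a hypothetical specification; the rollout functions `ramp` and `hold` are illustrative stand-ins for a learned controller and a backup policy.

```python
from typing import Callable, List, Sequence, Tuple

Rollout = Callable[[float, int], List[float]]


def always(margins: Sequence[float]) -> float:
    """Robustness of 'always phi': the worst-case margin over the horizon."""
    return min(margins)


def eventually(margins: Sequence[float]) -> float:
    """Robustness of 'eventually phi': the best-case margin over the horizon."""
    return max(margins)


def robustness(traj: Sequence[float]) -> float:
    # Hypothetical spec (an assumption for illustration):
    # always (x < 5) AND eventually (x > 3). Conjunction takes the minimum.
    safe = always([5.0 - x for x in traj])
    reach = eventually([x - 3.0 for x in traj])
    return min(safe, reach)


def plan_with_backup(
    learned: Rollout, backup: Rollout, x0: float, horizon: int
) -> Tuple[List[float], str]:
    """Use the learned rollout if it satisfies the spec, else fall back."""
    traj = learned(x0, horizon)
    if robustness(traj) > 0:
        return traj, "learned"
    return backup(x0, horizon), "backup"


def ramp(x0: float, horizon: int) -> List[float]:
    # Stand-in for a learned controller: the state increases by 1 per step.
    return [x0 + float(t) for t in range(horizon)]


def hold(x0: float, horizon: int) -> List[float]:
    # Stand-in for a backup policy: the state stays where it is.
    return [x0] * horizon


if __name__ == "__main__":
    print(plan_with_backup(ramp, hold, 0.0, 5))
    print(plan_with_backup(ramp, hold, 2.0, 5))
```

A positive score means the trajectory satisfies the specification with some margin, and a non-positive score triggers the fallback. Because `min` and `max` pass gradients only through a single time step, gradient-based training on such a score would typically use a smoothed variant, which this sketch omits.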